The 0-1 Knapsack Problem

The knapsack problem is textbook material in fields like computer science, mathematics, operations research, etc., and I find it compelling for two main reasons. First, it is easy to describe in words, yet not so easy to solve. Second, in addition to being textbook, it is applicable to a variety of everyday situations.

So what is the knapsack problem? Imagine you are going on a trip and need to pack a suitcase. After laying out everything you want to take on the bed, you realize it cannot all possibly fit in the suitcase. To determine which items to take and which to leave, you ascribe some amount of worth (monetary value, utility, etc.) to each item and then measure its size. The nature of the task is then simple – given the limited size of the suitcase, which items do you pack to maximize the overall worth it contains?

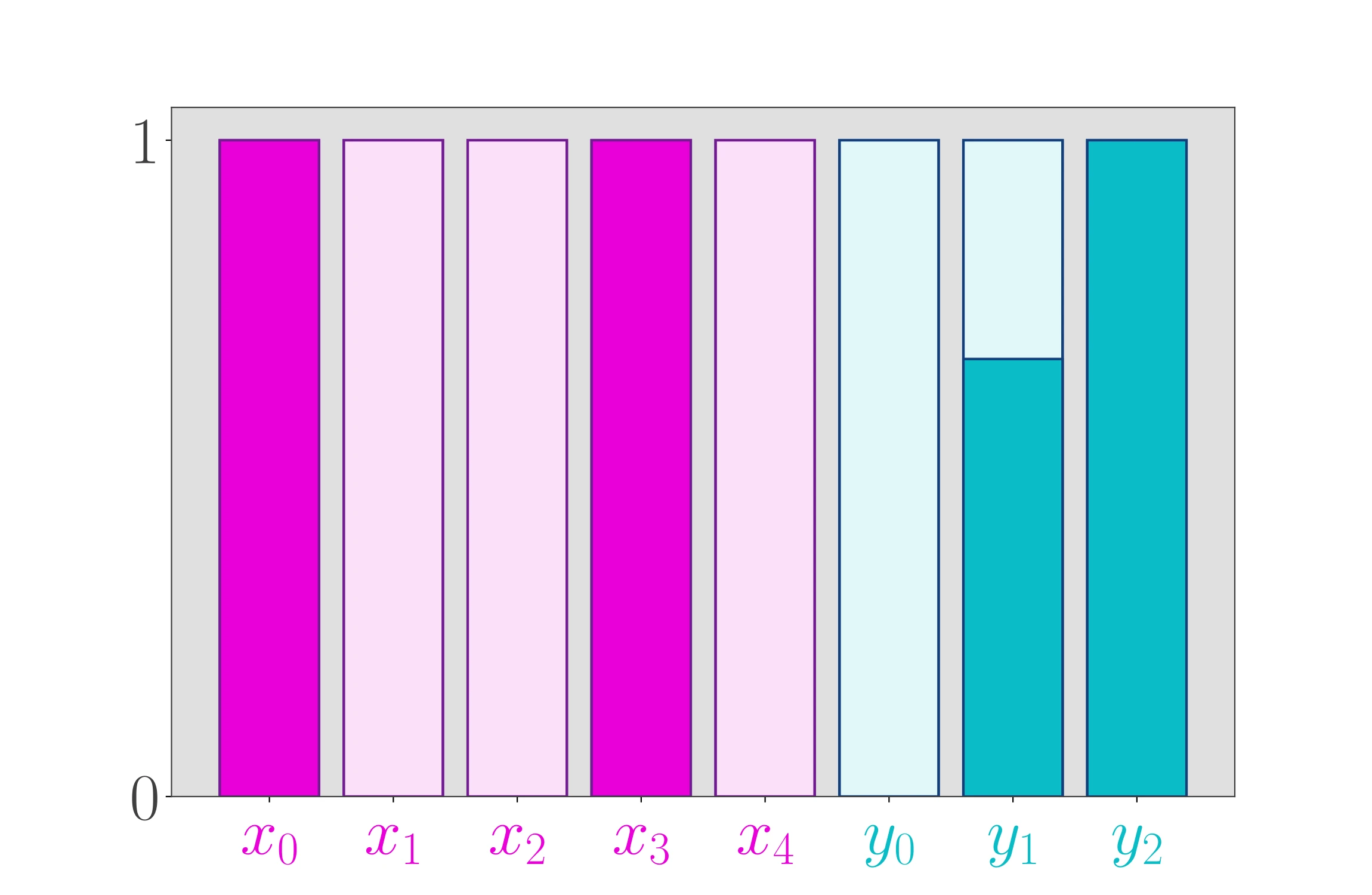

This particular scenario is an instance of the 0-1 knapsack because there is exactly one of each item, and each item either stays at home (0) or goes in the suitcase (1). There are other variants of the knapsack problem. In the general discrete knapsack problem, you consider groups of like items in contrast to considering each item individually. For instance, if you have 8 identical shirts and 3 identical pairs of pants, how many of each do you pack to maximize the value of your suitcase? Another variant is the continuous knapsack problem in which items need not be kept whole. Of course, it doesn’t make any sense to pack two-thirds of a shirt. The continuous knapsack problem does not apply to this scenario which is a shame as it is much, much easier to solve!

In my opening, I asserted the knapsack problem can be employed in many situations. Going to the grocery store on a fixed budget? Knapsack. Figuring out which chores to knock out on a free Saturday afternoon? Knapsack. Filling a pick-six of beer? You guessed it, knapsack. Each of these situations involves selecting some subset of items (groceries, chores, beers) to maximize a total value (food in cart, work completed, tastiness) given some limitation (money, time, space).

Understanding the problem is one thing, understanding how to solve it is another. It is possible to formulate the problem as an integer program and solve with general methods like branch-and-bound. However, and skirting the details, such methods are not efficient in comparison to this post’s featured method – dynamic programming. With dynamic programming, the challenge is to structure the problem in such a way that it can be broken down into progressively smaller, easier subproblems, then build the solution from the bottom up using recursion.

Applying this to the suitcase packing situation, the desired structure can be realized by considering each item one at a time. For each item considered, two subproblems are formed. First, assume the item is not packed. Under that assumption, the remaining space in the suitcase is unchanged – what is the optimal selection of remaining items? And second, assume the item is packed and thus takes up space in the suitcase. Again, what is the optimal selection of remaining items (given the reduction in the available suitcase space from having packaged the item). Repeat and repeat until all items have been considered, but there is a catch. Each new item presents a ‘fork in the road’ so to speak; such is the nature of recursion. If there are 3 items, this problem is not hard, as there are only 8 possibilities to consider. However, if there are 10 items from which to choose, there are over 1000 possibilities!

The code below is an efficient implementation of a dynamic programming solver for the 0-1 knapsack problem. For the remainder of this post, I will break down the design of the implementation and offer a functionally equivalent, but slower alternative that is more explicit in its behavior.

|

|

You might find it curious that I have defined a function inside a function here. In Python,

everything is an object, even functions! It is common to refer to these as outer and inner

functions, and the inner function is just an object that exists strictly in the scope of the outer

function. Over time, I’ve come to appreciate the value of this paradigm when it comes to

implementing recursive functions. Note that over the course of the algorithm, sizes and values

never change. But if you had implemented the algorithm as two separate functions, those variables

would have to be passed again and again at each level of recursion. Seems silly. This way, those

variables only have to be passed once to the outer function, and they are accessible to both the

outer and inner functions. There are some stylistic reasons to prefer this implementation;

however, the main motivation is that memoization can be easily leveraged.

Memoization involves storing subproblem solutions so they may be quickly retrieved if and when the

same subproblem arises again later. This is in contrast to recomputing the solution from scratch.

At most, the number of subproblems is the number of items multiplied by the size of the suitcase

(assuming the suitcase has an integer-valued capacity and each of the items has an integer-valued

size). The option to enable memoization is an argument of the outer function. If enabled, a

memoization table is initialized by means of the lru_cache decorator imported from functools

(which is part of the Python standard library). The ’lru’ stands for ’least-recently used’.

This means that, generally, the cache will delete previously found solutions to free space for more

recently found solutions when the cache gets full. However, the cache is initialized with enough

storage to retain the solutions to all subproblems, thus guaranteeing that each subproblem is

solved at most once. If disabled, the cache is initialized with zero storage.

The overall problem is solved in the outer function. First, the cache is cleared and an initial solution is setup. Then, the items are assessed in reverse order. For each item $i + 1$ , if the best solution for items 1 through \(i\) is the same as the solution for items 1 through \(i + 1\), that indicates that item \(i + 1\) ought not be packed. The total value of selected items, what those items are, and the memoization information are all returned and in that order.

And that’s that. In all honesty, I surprised myself with how few lines of code are needed to solve this problem. Now let’s solve an instance of the 0-1 knapsack problem. Supposing you have opened a Python shell and loaded the knapsack solver function, you can test it with the following.

>>> sizes = [7, 9, 5, 12, 14, 6, 12]

>>> values = [3, 4, 2, 6, 7, 3, 5]

>>> capacity = 20

>>> z, x, info = good_knapsack_solver(values, sizes, capacity)

>>> print(z, x)

10 [0, 0, 0, 0, 1, 1, 0]

>>> print(info)

CacheInfo(hits=16, misses=56, maxsize=140, currsize=56)For this instance, the most value that can be packed is 10 and that can be realized by packing the 5th and 6th items only. By default, memoization is enabled. The size of the knapsack is 20, and there are 7 items, so the cache is built to store up to 140 solutions. A total of 56 + 16 = 72 calls to the inner function are required to solve this problem. And 16 of those calls are resolved by simply retrieving an already stored solution. Not bad. How does that compare to having memoization disabled?

>>> z, x, info = good_knapsack_solver(values, sizes, capacity, memoize=False)

>>> print(z, x)

10, [0, 0, 0, 0, 1, 1, 0]

>>> print(info)

CacheInfo(hits=0, misses=250, maxsize=0, currsize=0)As expected, the solution is the same, and it is good to verify that. However, with memoization disabled, the number of inner function calls more than triples by going up to 250.

In case a part of the code is unclear, I present an alternative implementation of the algorithm

that uses simpler structures. This implementation uses neither decorators nor inner functions. The

core function of the algorithm is, however, still recursive. The cache is implemented as a simple

class with a convenient __repr__ (i.e. string representation) method.

|

|

The main differences are the introduction of MyCacheInfo to handle and report on memoization and

the changes to _knapsack to explicitly handle memoization. The body of the solver is just about

the same. Though this implementation is functionally equivalent, it has significantly worse

performance. To reiterate, the purpose of presenting this alternative form is to demystify the

machinery used in the ‘good’ solver, not to promote its adoption. The performance difference can be

quickly observed by using the magic %timeit function from the enhanced

IPython shell. For example,

In [1]: from knapsack import (good_knapsack_solver, okay_knapsack_solver,

values, sizes, capacity)

In [2]: good_knapsack_solver(values, sizes, capacity, memoize=True)

Out[2]:

(10,

[0, 0, 0, 0, 1, 1, 0],

CacheInfo(hits=16, misses=56, maxsize=140, currsize=56))

In [3]: %timeit good_knapsack_solver(values, sizes, capacity, memoize=True)

45.7 µs ± 96.8 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)

In [4]: okay_knapsack_solver(values, sizes, capacity, memoize=True)

Out[4]: (10, [0, 0, 0, 0, 1, 1, 0], MyCacheInfo(hits=16, misses=56)

In [5]: %timeit okay_knapsack_solver(values, sizes, capacity, memoize=True)

58.2 µs ± 85.4 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)As shown for this particular instance, the performance of the ‘good’ solver is about 20% better (45.7µs vs. 58.2µs). This will vary from instance to instance, but the ‘good’ solver generally outperforms the ‘okay’ solver when memoization is enabled.

To recap, the knapsack problem is about selecting a subset of items in the presence of an option-limiting constraint. The problem can be solved with general methods, but dynamic programming is relatively efficient. The presented implementation uses a decorated inner function and in so doing keeps the implementation clear and concise while maintaining a superior performance to those developed more ‘by-hand’ or without memoization.

If you have any comments, queries, or critiques, drop a line in the comments below! In the meantime, happy problem solving!

You May Also Like

Python Tools for Math Modeling

This past week, I contemplated a number of math modeling applications …

The Mixed Integer 0-1 Knapsack Problem

Note: Thanks to Shraddha Ghatkar for pointing out an error in my …

The 0-1 Knapsack Problem, Revisited

When I first started this blog in 2019, one of my first posts was …