PEP572: Assignment Expressions

This past week saw the debut of PEP572 in the release of Python 3.8.0. A PEP is a Python Enhancement Proposal, a document that describes a feature and requests its incorporation into the Python language. Now PEP572 in particular was about as controversial as they come, so much so that it caused Guido von Rossum, the original author of Python, to step down from his role as Benevolent Dictator for Life and form a committee to replace him. Yikes!

What is PEP572?

So why the controversy? Well, the aim of this PEP was to introduce the walrus operator := to

perform assignments in ways that were previously not possible, e.g., inside a tuple declaration

or in a while-loop condition.

Oh, and in case you were wondering, the walrus operator is named so for its resemblance to the

eyes and tusks of a walrus. You can see it if you squint. Anyhow, not such a radical idea, is it?

Well, there are those with this or that argument against it like “it detracts from readability”

or “it is only useful as a shim to poorly implemented libraries” or “it will not be backward

compatible with older versions of Python 3.x”. Despite the merits of these arguments, PEP572 was

accepted and implemented for better or for worse.

I’ve been tracking PEP572 for a while. Not since its inception, but a while. And now that it’s here in the official Python 3.8 release, I figured it could use a little promotion. Here’s a taste.

|

|

This example is intuitive, but not very useful in practice. It is inferior to a more readable equivalent that ignores a value using the conventional underscore.

|

|

These snippets both result in the tuple ('foo', 'bar', 'baz') being assigned to z

and the strings 'foo' and 'bar' being assigned to x and y, respectively.

Newton’s Method

In my opinion, the best use of the walrus operator is to reduce code size in initialize-iterate-reassign patterns. In this section, I illustrate that benefit in the context of an implementation of Newton’s method.

Newton’s method is an iterative numerical method for finding roots of functions. A root of a function is a constant that satisfies . That is, plug into the function, and comes out.

Roots are sometimes trivial to find. For example, suppose . By inspection, we find the root by setting and solving for to get . Newton’s method really shines when the roots are difficult to find analytically or when multiple roots exist.

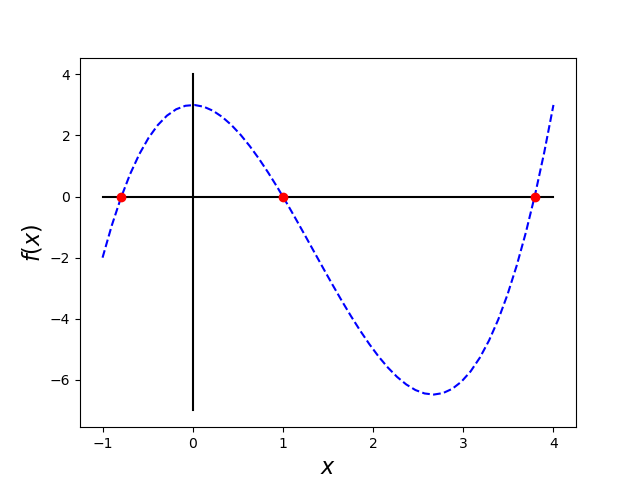

For the rest of this article, let’s consider the 3rd-order polynomial function

with derivative

.

Here is its graph.

In Python, here is how we define this function and its derivative. Later, these functions will

be passed as objects to our implementations of Newton’s method. Remember that virtually everything

in Python is an object, even a function!

In Python, here is how we define this function and its derivative. Later, these functions will

be passed as objects to our implementations of Newton’s method. Remember that virtually everything

in Python is an object, even a function!

|

|

A bit of quick guess-and-check or a look at the graph tells us the value is a root of this function. But a 3rd-order polynomial can have as many as 3 roots! As we can see, this one has exactly 3 roots and the remaining two are not nearly as easy to calculate. So we employ a simple form of Newton’s method that is based on the following recurrence relation:

Below is a code implementation of Newton’s method that does not use the walrus operator.

|

|

This code is functionally correct. It is, however, a little clunky. The logic that is used to

initialize x_next is repeated inside in the for-loop to iterative update x_next.

To reduce code size, we might try a slightly different implementation that still does not include the walrus operator.

|

|

bad_newtons_method.

If only there were a happy medium between the first and second implementations, something both

terse and computationally efficient…

The Walrus Operator

Ask and you shall receive.

|

|

Ah, beautiful! Notice the walrus operator in the middle of the while condition. Not only does

this code perform just as well as the first implementation, it is more compactly written

than either of the previous two examples. And yes, this code is also functionally correct.

Now, let’s use what we’ve built!

|

|

Using the carefully handpicked initial guesses of and , we ascertain that the roots are roughly , and . If we look back to our graph of this function, we can visually verify these roots. Neat!

Like what you learned? Did I make a mistake? Let me know in the comments! Happy root-finding!

You May Also Like

Python Tools for Math Modeling

This past week, I contemplated a number of math modeling applications …

The 0-1 Knapsack Problem

The knapsack problem is textbook material in fields like computer …

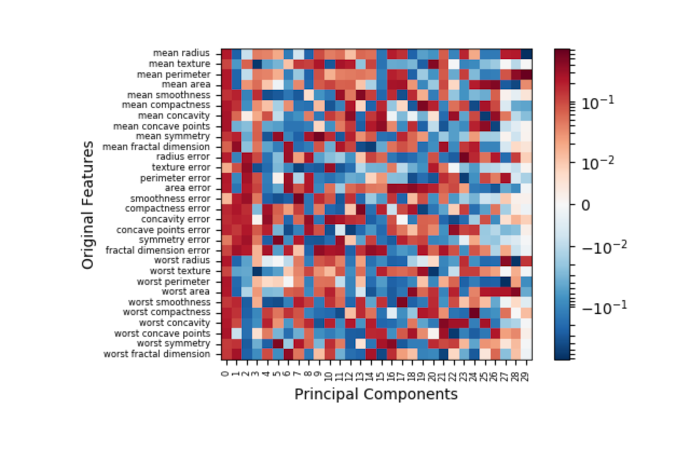

Principal Component Analysis

Recently, I worked on a project that necessitated data exploration and …